Slouching Toward Chatbots

July 17, 2023

Washington University just issued some sensible guidance to researchers excited about the latest AI capabilities: check accuracy, be transparent, be vigilant. A friend who works in the public sector tells me he has outsourced all the boring parts of his job to chatbots. I have begun to ask them frivolous questions: “If I loved Shalimar and Ysatis and now Baccarat Rouge, design a fragrance for me.”

We are, in other words, settling in. Shifting from jaw-dropped amazement at chatbots’ abilities and raw terror at their usurping of our jobs into more prosaic applications and more nuanced reflection. On pronouns, for example. Kevin Munger, who studies the intersections of political science, tech, and social media, suggests we stop these large language models from using first-person pronouns. Though most of the commenters on his proposal deemed this unnecessary, even silly, I think it is the most relevant, philosophically accurate tweak that could be made. We read “I” and instinctively assume personhood. We then begin to speculate about this entity along those lines—whether it thinks and feels as we do. Soon we are conversing, thanking, sassing back, testing its responses. Flirting with it. Even Anthropic, which has set out to make a safer, more socially responsible AI, has named it Claude. But a more honest syntax would answer our queries with “algorithms indicate” or “the database suggests,” or even do that old-fashioned honest thing and quote sources. And then nobody would fall in love with a personified AI and ruin their lives.

By keeping the LLMs distinctly nonhuman, we might also save civility. Exhausted by the debates on what pronouns or descriptors should be used for various individuals, my husband snapped at a TV news anchor, “How about ‘human’?” That was when it dawned on me: if all the subdivisions and differences could be accepted and set aside and the only remaining tension were human versus nonhuman, we might band together against our common frenemy. Research shows that it is far harder to be prejudiced against a group that you know contains all sorts of people. Enlarge our focus to the entire species, and we might eliminate prejudice against everything but the machines. And as we are already jealous of them as well as scared, they might as well serve as our scapegoats.

L.M. Sacasas points out that the work AI does best is work that was already “formulaic, mechanistic, and predictable.” Duh, you mutter. But his point is that we set the stage for an LLM takeover ourselves, long before the tech arrived. It is easy, now, to imagine that AI will displace us, because we have allowed much of what we do to become “so thoroughly mechanical or bureaucratic, for the sake of efficiency and scale, say, that it is stripped of all human initiative, thought, judgment, and, consequently, responsibility.” People began to behave formulaically, latching onto buzzwords and cliches, squelching spontaneous remarks, solving problems by citing manuals and procedures, holding empty meetings, making calls so scripted they talked over your response, writing reports no one would ever read. Why not outsource and automate those tasks?

As boring-but-important work—performance evaluations, grant applications, annual reports, the screening of job candidates—is relegated almost entirely to AI, though, the mystique of those documents will dissolve. Because humans are not generating them, interpreting them or jumping through their hoops, they will have, for other humans, power stripped of meaning. No human has expressed their priorities or desires or vision; no human has decided how blunt or tactful to be, how bold or humble. Even when people just copied from a template, they added a bit of themselves to the finished product. Once AI is both generating and receiving the documents, will they even seem important?

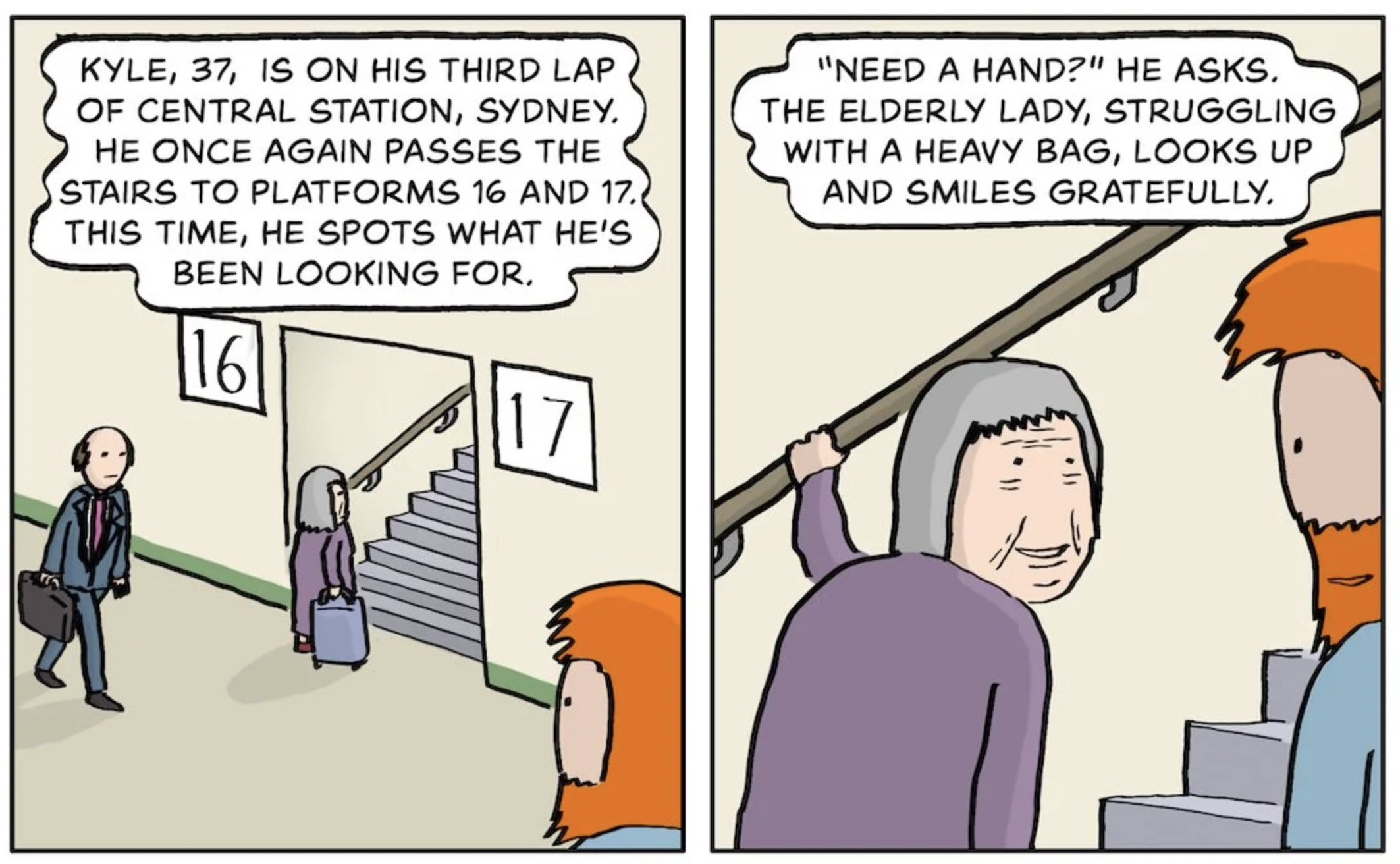

Maybe a lot of the busywork can fall away, and we can find less mechanical ways to make decisions. But the deeper problem, Sacasas writes, is that we lost confidence in ourselves decades ago. “We have already outsourced many of our core human competencies,” opting instead for a machine-like system that required less effort, deep thought, creativity, and risk. Now we are too distracted and undisciplined to work in ways no machine could emulate. Dazzled by computers’ accelerated evolution, we let ourselves depend on them until we were helpless without them, “mere consumers.” We grumble over the flowchart hell of calling customer service and being forwarded hither and yon by a succession of pick-a-number flowcharts, chatbots, taped messages, and hold music. But would we keep the receptionist who could understand someone’s needs in the first ten seconds and send them exactly where they needed to go? Too expensive. A waste. Outmoded. And—the clincher—that receptionist would need health insurance.

Those who do the most human work—nannies, artists, philosophers, nuns, schoolteachers, midwives, massage therapists, hospice workers—have traditionally been the least valued, or at least the least compensated in terms of salary and status. Yet they are the ones who should not, cannot, be replaced by AI. As for the work that can be entirely replaced by AI, it might need reconfiguring as we build ourselves back into the equation. If, that is, we can muster the chutzpah to do so.

For months now, we have all been ooohing and aaahing over split-second sonnets and analyses that strike us as profound and therefore eerily human. The “I” that gave us these wonders seems capable of virtually anything. And because it is an “I” with whom we can “converse”—complex and witty exchanges being a marvelously human trait—we forget that it is us. Machine learning might take off on its own when challenged with physical activity or a new language or proteins to decode, but those poems and analyses came from training on zillions of poems and analyses done by humans. “Oh, look how quickly it was able to assimilate and reorder all our poems about death,” would be the appropriate compliment, instead of a chilled whisper about how human it seems.

“The machine will liberate us only to the degree that it swallows itself,” Sacasas writes. “That it swallows up all of what the machine, in its longstanding technological, economic, and institutional forms, had required and demanded of us.” Instead of focusing only on the mechanical stuff, though, people are suggesting philosophically absurd uses. Let an LLM replace humans in social science research. (Would it not then become AI research?) Soothe the lonely by becoming their romantic partner. Act as companion to children unable to make human friends. Script a TV series about humans. (And yes, it can do that. And what you will end up with is a new spin on what has already been done—just like the franchises give us today, with their new iterations of the same old. They, too, have lost confidence.)

In some ways, we seem to want AI to replace us. Or at least to replace the people too foolish to fall in love with us or too impatient to adore our flaws. The pronoun is “I” to make the chatbots seem friendly, so we will converse with them, but that also encourages us to anthropomorphize them, loving them as we would a human.

And why not? We have more confidence in them than we have in ourselves.

Read more by Jeannette Cooperman here.